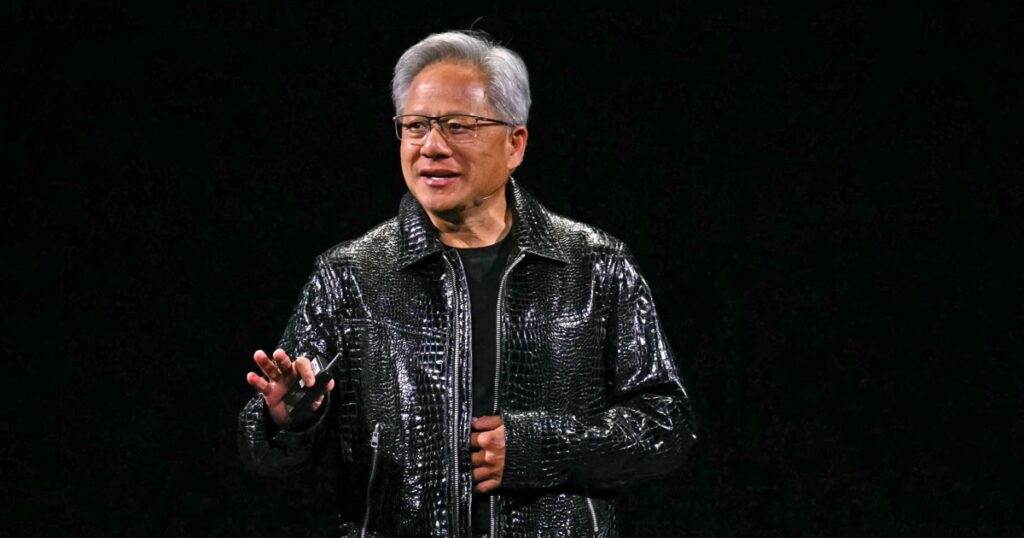

Nvidia CEO Jensen Huang said next-generation AI requires 100 times more computation than older models because of a new inference approach in which models think “about the best answer.”

“The amount of calculation required to do that inference process is 100 times more than what we had done before,” Huang told CNBC’s Jon Fortt in an interview Wednesday, following the chipmaker’s fourth-quarter revenue report.

He cited DeepSeek’s R1, OpenAI’s GPT-4, and xAI’s Grok 3 as models that use the inference process.

Nvidia reported results that beat analyst estimates, with revenue rising 78% from a year earlier to $39.33 billion. Data center revenue, which includes Nvidia’s market-leading graphics processing units (GPUs) for artificial intelligence workloads, jumped 93% to $35.6 billion and now accounts for more than 90% of total revenue.

The company’s shares have not recovered after losing 17% of their value on January 27, their worst drop since 2020. That drop followed concerns, raised by Chinese AI lab DeepSeek, that companies could potentially get greater AI performance at much lower infrastructure costs.

Huang pushed back on that idea in the interview Wednesday, saying DeepSeek has popularized inference models that require more chips.

“DeepSeek was amazing,” Huang said. “It opened up a model of reasoning that is absolutely world class, so it was fantastic.”

Nvidia’s business in China has been restricted by export controls that were tightened at the end of the Biden administration.

Huang said export restrictions have cut the share of the company’s revenue that comes from China by about half, adding that the company faces other competitive pressures, including from Huawei.

Developers will likely look for ways around export controls through software, whether for supercomputers, personal computers, phones, or game consoles, Huang said.

“In the end, software finds a way,” he said. “In the end, you’ll run that software on a system that’s targeting it and create great software.”

Huang said Nvidia’s GB200, which is sold in the United States, can generate AI content 60 times faster than the versions of its chips that the company sells to China under export controls.

Nvidia’s revenue comes from the billions of dollars that the world’s largest tech companies spend on infrastructure every year. The company has been the biggest beneficiary of the AI boom, with revenue more than doubling for five straight quarters through mid-2024 before growth slowed slightly.